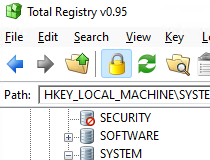

When working with the Registry, you should always create a backup in case you run into an issue. Total Registry is a replacement for the Windows built-in Regedit.exe tool, and it offers so many features and options that you will be hard-pressed to go back to the default.

Total Registry supports undo/redo, copying and pasting keys and values, optionally replacing RegEdit, connecting to a remote Registry, and viewing open key handles. It displays MUI strings and expanded REG_EXPAND_SZ values, performs full searches (Find All / Ctrl+Shift+F), and provides an enhanced hex editor for binary values. Key icons distinguish hives, inaccessible keys, and links, and each key shows details such as its last write time and its number of subkeys and values. You can also view the real Registry (not just the standard view) and sort the list view by any column.

Total Registry's goal is to deliver these improvements in a smooth new UI, including options you have probably wished for at one time or another while using the default Regedit ("why isn't this here?"). Regedit handles many Registry tasks, but there is always room for improvement, and Total Registry offers a nicely enhanced, open-source replacement.

Stable Baselines3 is a set of reliable implementations of reinforcement learning algorithms in PyTorch. It is the next major version of Stable Baselines. These algorithms will make it easier for the research community and industry to replicate, refine, and identify new ideas, and will create good baselines to build projects on top of. We expect these tools will be used as a base around which new ideas can be added, and as a tool for comparing a new approach against existing ones. We also hope that the simplicity of these tools will allow beginners to experiment with a more advanced toolset without being buried in implementation details.

Most of the library tries to follow a sklearn-like syntax for the reinforcement learning algorithms, using Gym environments. Here is a quick example of how to train and run PPO on a CartPole environment:

```python
import gymnasium

from stable_baselines3 import PPO

# render_mode="human" lets vec_env.render() open a window
env = gymnasium.make("CartPole-v1", render_mode="human")

model = PPO("MlpPolicy", env, verbose=1)
model.learn(total_timesteps=10_000)

vec_env = model.get_env()
obs = vec_env.reset()
for i in range(1000):
    action, _states = model.predict(obs, deterministic=True)
    obs, reward, done, info = vec_env.step(action)
    vec_env.render()
    # VecEnv resets automatically
    # if done:
    #   obs = vec_env.reset()

env.close()
```

Or just train a model with a one-liner if the environment is registered in Gymnasium and if the policy is registered:

```python
from stable_baselines3 import PPO

model = PPO("MlpPolicy", "CartPole-v1").learn(10_000)
```
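The `# VecEnv resets automatically` comment in the example means the loop never has to call `reset()` itself. To make that behaviour concrete without installing anything, here is a minimal pure-Python sketch of the convention. `CountdownEnv` and `AutoResetVecEnv` are hypothetical stand-ins, not Stable Baselines3 classes; they only mimic the documented VecEnv behaviour where, once an episode ends, `step()` returns the first observation of the next episode and keeps the final observation in `info["terminal_observation"]`. Real VecEnvs batch several environments and return NumPy arrays rather than lists.

```python
from typing import Any, Dict, List, Tuple


class CountdownEnv:
    """Hypothetical toy env: the observation counts down from `length`;
    the episode ends when it reaches 0. Every step gives reward 1.0."""

    def __init__(self, length: int = 3) -> None:
        self.length = length
        self.remaining = length

    def reset(self) -> int:
        self.remaining = self.length
        return self.remaining

    def step(self, action: int) -> Tuple[int, float, bool, Dict[str, Any]]:
        self.remaining -= 1
        done = self.remaining == 0
        return self.remaining, 1.0, done, {}


class AutoResetVecEnv:
    """Hypothetical one-env wrapper mimicking the VecEnv auto-reset convention."""

    def __init__(self, env: CountdownEnv) -> None:
        self.env = env

    def reset(self) -> List[int]:
        return [self.env.reset()]

    def step(
        self, actions: List[int]
    ) -> Tuple[List[int], List[float], List[bool], List[Dict[str, Any]]]:
        obs, reward, done, info = self.env.step(actions[0])
        if done:
            # Keep the real last observation, then start a new episode so the
            # returned obs is the first observation of the next episode.
            info = dict(info, terminal_observation=obs)
            obs = self.env.reset()
        return [obs], [reward], [done], [info]


if __name__ == "__main__":
    vec_env = AutoResetVecEnv(CountdownEnv(length=3))
    obs = vec_env.reset()
    for _ in range(4):
        obs, rewards, dones, infos = vec_env.step([0])
        # On the third step: obs is [3] again, dones is [True],
        # and infos[0]["terminal_observation"] is 0.
        print(obs, dones, infos)
```

This is why the example's loop can keep calling `step()` for 1000 iterations across many CartPole episodes: a `done` flag signals an episode boundary, but the returned observation is already valid input for the next `predict()` call.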